This article was originally published by Andreas Bender and Isidro Cortes-Ciriano in Drug Discovery Today under a Creative Commons license.

Although artificial intelligence (AI) has had a profound impact on areas such as image recognition, comparable advances in drug discovery are rare. This article quantifies the stages of drug discovery in which improvements in the time taken, success rate or affordability will have the most profound overall impact on bringing new drugs to market. Changes in clinical success rates will have the most profound impact on improving success in drug discovery; in other words, the quality of decisions regarding which compound to take forward (and how to conduct clinical trials) is more important than speed or cost. Although current advances in AI focus on how to make a given compound, the question of which compound to make, using clinical efficacy and safety-related end points, has received significantly less attention. As a consequence, current proxy measures and available data cannot fully utilize the potential of AI in drug discovery, in particular when it comes to drug efficacy and safety in vivo. Thus, addressing the questions of which data to generate and which end points to model will be key to improving clinically relevant decision-making in the future.

Artificial intelligence in drug discovery: time for context

Many terms have been used to describe the application of algorithms in drug discovery, including computer-aided drug design (CADD, which often refers to more structure-based approaches); structure–activity relationship (SAR) analysis (which aims to relate changes in chemical structure to changes in activity); chem(o)informatics (extending SAR analyses to large compound sets, using different methods, and across different bioactivity classes); and, more recently, machine learning and artificial intelligence (AI). Although the terminology differs, what matters at the core is (i) which data are being analysed and (ii) which methods are used for this purpose. The current focus of AI in drug discovery is largely on methodological, computational aspects (such as deep learning [1]), but one might argue that the question of which data are being used to achieve a goal logically comes first (as does the question of whether these data allow us, even in principle, to answer the question at hand). We will return to this topic later in this contribution; it is also the main focus of the companion article to this work [2].

CADD has at least 40 years of history (for a recent editorial providing historical context, see [3], and for a review, see [4]), with Fortune magazine proclaiming the ‘next Industrial Revolution’ in 1981 [5], and that drugs would be designed in the computer from now on (Fig. 1). The years approaching 2000, which culminated in the collapse of the stock market ‘biotech bubble’, saw a similar period of increased focus on computational methods for drug discovery. Although not all of the expectations of the time have been fulfilled, CADD is certainly routinely applied in pharmaceutical research today, covering a wide range of ligand- and structure-based techniques and approaches [2]. Also, when it comes to decision support systems for physicians in the medical domain, the New England Journal of Medicine proclaimed as early as 1970 (the year when the Beatles split up) that: “Computing science will probably exert its major effects by augmenting and, in some cases, largely replacing the intellectual functions of the physician” [6]. In this area, the landscape is quite possibly more fragmented than in the case of using CADD in early-stage drug discovery (owing to the nature of the underlying data generated and the multiple aspects of the parties involved in providing and funding healthcare, as well as the legal aspects of the confidentiality of patient data [7]). Still, both statements show that, at a given moment in time, it is not always possible to anticipate the potential of a current development realistically.

In 2007, one of the people involved in CADD from its infancy, John Van Drie, attempted an outlook of ‘the next 20 years’ in CADD [8], the majority of which has now passed. He expected seven major developments to happen during this timeframe:

(i) Computational thermodynamics will flower.

(ii) We’ll learn to turn potent ligands into drug candidates.

(iii) We’ll face new classes of drug targets which will challenge our competencies.

(iv) We’ll encounter novel molecular mechanisms for drug efficacy (e.g., self-assembling drugs).

(v) Rather than thinking about inhibiting a single target, we’ll learn to model an entire signal transduction pathway, and use that understanding to better select drug targets.

(vi) Today’s sophisticated CADD tools only in the hands of experts will be on the desktops of medicinal chemists tomorrow. The technology will disperse.

(vii) Virtual screening will become routine.

Much of this has indeed become reality. Regarding point (i), free energy perturbation (FEP) is applied productively to anticipate the affinity of compounds [9]. In terms of point (ii), in vivo pharmacokinetics (PK) and metabolism are still tricky to deal with today, but larger and larger in vivo data sets have become available (although often only inside pharmaceutical companies) that attempt to take ADME (absorption, distribution, metabolism and excretion) and PK properties into account earlier on [10,11]. We have learnt (but also still need to learn) to deal with new classes of drug targets (iii) and molecular mechanisms (iv), such as antisense oligonucleotides [12], gene therapy, antibodies [13] and PROTACs, which makes the current times very exciting. Modelling ‘entire signal transduction pathways’ (v) is often possible on a local basis for individual signal transduction cascades, but the full impact of these pathways on disease biology is still often difficult to ascertain, in particular as a result of pathway crosstalk. Bringing CADD tools to medicinal chemists (vi) remains a challenge [14], quite possibly owing to differences in thinking and approaches across disciplines, but also as a result of practical considerations. The needs and requirements are different between experts and people who use a resource as a tool to solve a problem, as is the depth of understanding of the approach employed, and hence the need to be flexible in some cases and ‘fool-proof’ in others. (For a recent and insightful article on the interface of computational and medicinal chemistry, see [15].) To conclude the list, virtual screening (vii) is certainly a staple today [16], with a large number of free software implementations now available (although there is still some debate over the relative merits of different methods, and as we will see later, we still need to ask ourselves whether we model practically relevant end points in many cases).

What has emerged, however, is that chemistry can be handled computationally much better than biology can. The underlying principles of thermodynamics that in part determine the affinity of a ligand for a receptor are well defined (although entropic contributions and the flexibility of a structure, in particular, can still be difficult to handle in practice). But more complex biology, such as receptor conformational changes, equilibria and biased signalling, is already much more difficult to understand, and it only gets more difficult if one moves to events further downstream, such as changes in gene expression or protein modifications (especially when it comes to modelling changes spatially and over time).

This is still the Achilles heel of current computational drug-discovery efforts, and hence also poses problems when applying AI. We are able to describe chemistry rather well, and have a large amount of proxy assay data available for modelling, hence this type of data has been a key focus area of AI in the field in the recent past. However, a drug acts on a biological system, for which it is much more difficult to define a finite set of parameters, and hence we are also confronted with much more uncertainty over which experimental readout conveys a signal of relevance for efficacy or safety (for a good discussion of the topic, see [17]). A crucial aspect is the different underlying nature of chemical information (here defined as chemical structures) and biological information (genes and proteins, their interactions, and the resulting phenotypes at the cellular, organ and organism level), as briefly summarized in Table 1 (which is discussed further below, and more extensively in part two of this article [2]). In short, AI in drug discovery needs quantitative variables and labels that are meaningful, but we are often insufficiently able to determine which variables matter, to define them experimentally (and on a large enough scale) and to label the biology for AI to succeed on a level that is compatible with the current investment and hope in the area.

Table 1. Illustration of some of the fundamental differences of chemical information and biological information, both of which are essential for applying AI in the drug-discovery process

| | Chemical information | Biological information |
|---|---|---|
| Dimensionality and interdependency | High dimensionality (ca. 10^63 small molecules are thought to represent generally plausible structures [18]); no interdependency between data points (molecules) | Medium to high dimensionality (ca. 23,000 genes to 10^13 cells in the human body); even higher (and largely unknown) interdependency between data points (e.g., gene expression, protein interactions, etc. depend highly on context, such as cell type) |
| Current understanding of field | Good understanding of underlying physical principles (e.g., chemical reactivity, thermodynamics) | Limited: often unclear which type of biological information contains relevant signal for end point of interest, and in which biological background a given molecule/pathway has a direct connection to disease (e.g., [21]) |
| Sufficiency of data generated to describe the system | Chemical structure describes small molecule in its entirety (also crystal packing, etc. can be defined in detail); dynamic aspects of structure such as tautomeric states sometimes undefined | Unclear which type of information contains which type of signal, hence difficult to determine |
| Stability of system | Chemical structures need to be relatively stable to be synthesizable and used as a drug (although degradation can represent a problem in compound libraries [22]); analytical methods for structure determination (NMR, LCMS, etc.) are fast and cheap | Biological systems suffer from drift (e.g., cell line drift [23]), plasticity and high heterogeneity [24], which is difficult to define. This even leads to different responses of a cell line to the same drug in a high number of cases [24] |
| Data integration | Once the chemical structures are determined, their representation is invariant across experiments; bioactivity readouts measured across different assays or laboratories are often noisy [25,26], which also can have an impact on model performance [27] | Biological readouts are highly dependent on the experimental system/assay used, and are thus often not reproducible. Correction of batch effects is needed (e.g., for the integration of single-cell RNA-seq data [28] or histopathology images). Also a need for normalization and integration of data types measured at different scales, resolutions, time points, etc. |

It should be added that here we focus on the utilization of data that can be used for decision making in drug-discovery projects, such as in the pharmaceutical industry. This remit should be discriminated clearly from data generation for more exploratory projects, in which the aim is to understand biological relationships through the profiling of biological systems using biochemical or high-throughput experiments such as DNA sequencing [18] or proteomics technologies (for understanding drivers of cancer, for example), and which often have yet to reach the point of actionable information. Both types of project require different data to be successful: drug discovery is based on solidly established relationships (be they of a statistical or mechanistic nature), whereas exploratory projects are often hypothesis-free and do not require actionable consequences, at least in the first instance. Still, making the step to generating solid hypotheses for decision making (that is, going from exploration to drug discovery) is not always done successfully in practice; biological data do not always lend themselves to the development of clear relationships in data sets, partly for the reasons outlined in Table 1.

In parallel with our improved understanding of chemical (versus biological) systems, advances in science have tended to focus primarily on larger numbers, instead of better quality (and relevance) of the data being generated. From the technological side, this has involved, for example, the development of combinatorial chemistry in the 1980s and high-throughput screening in the 1990s, which was lauded at the time (and which has certainly allowed us to do ‘more’, if so needed, and is useful for targeted screens in cases in which a validated target is well-defined and amenable to miniaturized screens) [29]. This, however, did not turn the tide with respect to new drug approvals to the extent that had been hoped for at the time, measured as the number of approved new drugs or the number of drugs with a new mode of action (first-in-class drugs) [30].

So did doing more help drug discovery in the past? Will doing more help us currently? As we will see later in this article (and in agreement with previous studies [31,32]), it is likely to be the quality of decisions that will allow drug discovery (as opposed to ligand discovery) to flourish in the future. Alas, our focus on chemistry (and the relative neglect of biology) and our preference for large numbers and proxy measures (as opposed to determining biological parameters that matter) is unlikely to provide the most solid basis for developing drugs in the computer, using AI, as we explain in more detail in the following.

We now discuss (i) whether speed, cost or the quality of transitions is most relevant for the success of drug discovery projects, followed by (ii) a discussion (by no means exhaustive) of proxy measures currently used in drug-discovery projects and their quality with respect to decision making. We follow this with (iii) a review of the current status of the field of AI in drug discovery and the extent to which it is able to address the main needs of the field [see point (i)] using available data [see point (ii)], with a particular focus on (iv) the quality of current validations available. We conclude with (v) an outlook on how to improve the current state of the art.

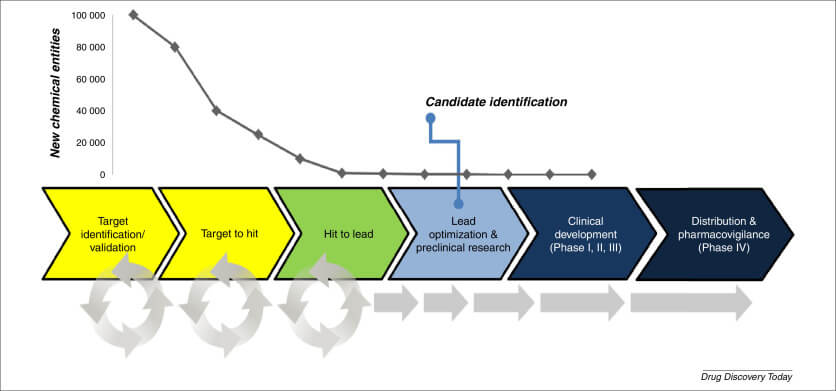

Quality is more important than speed and cost in drug discovery

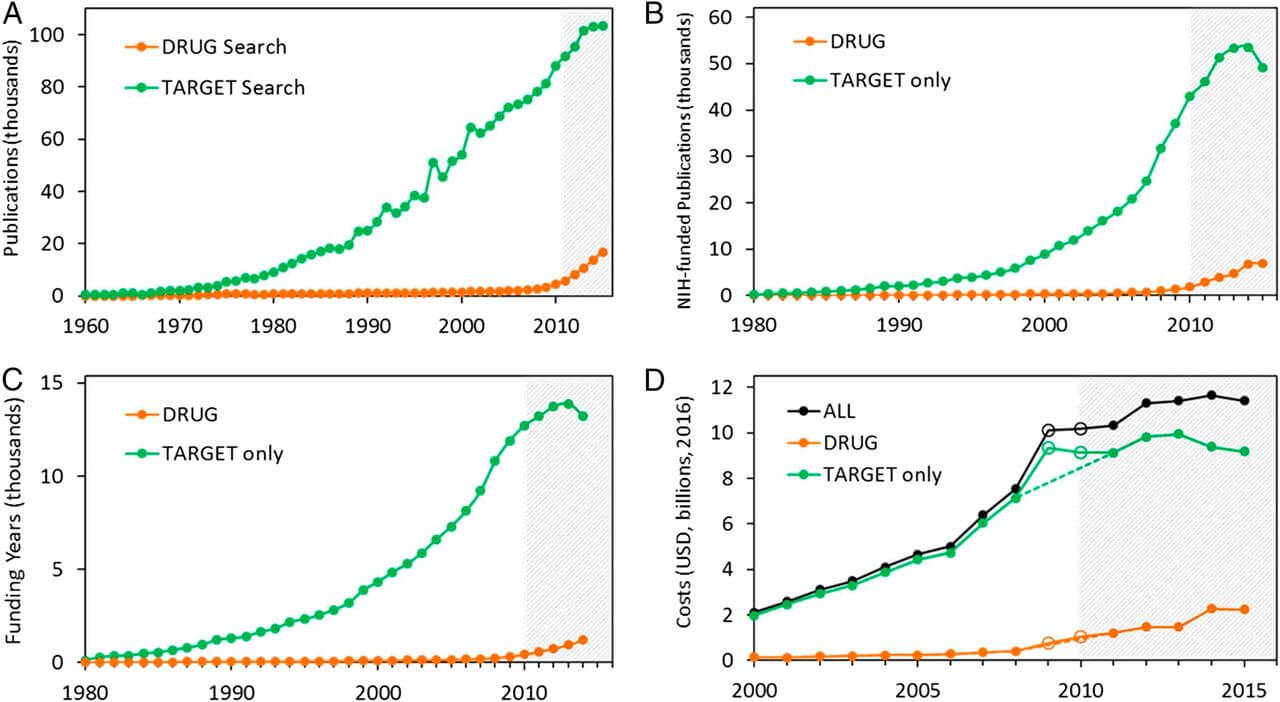

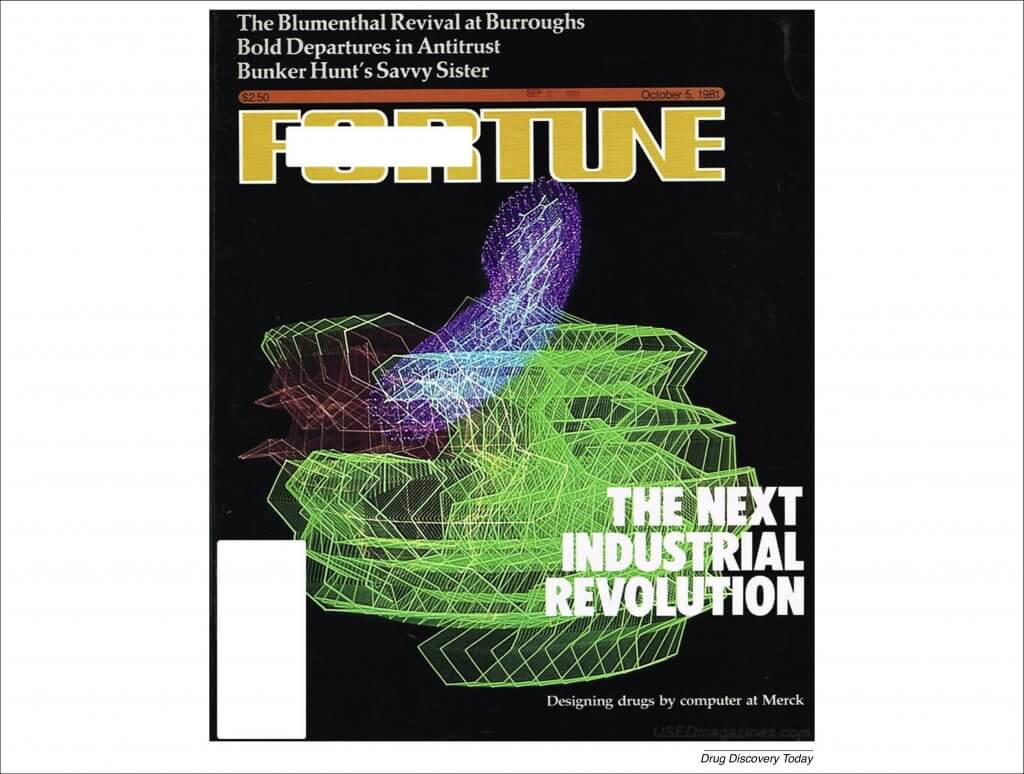

We first attempted to simulate the effect of (i) speeding up phases in the drug discovery process, (ii) making them cheaper and (iii) making individual phases more successful on the overall financial outcome of drug-discovery projects. In every case, a 20% improvement of the respective measure (speed, cost or success of a phase; for the failure rate, 20% in relative terms) was assumed to quantify effects on the capital cost of bringing one successful drug to the market. For the simulations, a patent lifetime of 20 years was assumed, with patent applications filed at the start of clinical Phase I, and the net effect of changes of speed, cost and quality of decisions on overall project return was calculated, assuming that projects, on average, are able to return their own cost. This simulation was based on numbers from [33], which assumed a cost of capital of 11% (high compared to current times), but the general finding also holds for other costs of capital. (Studies such as [34], which posed the question of which changes are most efficient in terms of improving R&D productivity, returned similar results to those presented here, although we have quantified them in more detail.) For more recent and more detailed (but not fundamentally different) numbers on the success of drug discovery by phase and disease area, see [32].
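The logic of such a simulation can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the model used for Figure 2: the phase parameters in `PIPELINE` are hypothetical round numbers (they are not the values from [33]), the names `Phase`, `cost_per_approval`, `improve` and `relative_savings` are invented for this sketch, each phase's cost is assumed to be spent at the start of the phase, and revenue effects of remaining patent life are ignored.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Phase:
    name: str
    p_success: float  # probability that a project passes this phase
    cost: float       # cost per project entering the phase (arbitrary units)
    years: float      # duration of the phase


# Hypothetical placeholder values, chosen only to make the sketch runnable.
PIPELINE = [
    Phase("Preclinical", 0.70, 5.0, 1.0),
    Phase("Phase I", 0.55, 15.0, 1.5),
    Phase("Phase II", 0.35, 40.0, 2.5),
    Phase("Phase III", 0.70, 150.0, 2.5),
]


def cost_per_approval(phases, cost_of_capital=0.11):
    """Capitalized expected cost of obtaining one approved drug."""
    total = 0.0
    for i, phase in enumerate(phases):
        remaining = phases[i:]
        # Probability that a project entering this phase reaches approval.
        p_to_approval = 1.0
        for later in remaining:
            p_to_approval *= later.p_success
        entries_needed = 1.0 / p_to_approval
        # Money spent at the start of this phase is tied up until launch.
        years_to_launch = sum(later.years for later in remaining)
        total += entries_needed * phase.cost * (1.0 + cost_of_capital) ** years_to_launch
    return total


def improve(phases, index, lever, fraction=0.20):
    """Return a copy of the pipeline with one lever of one phase improved."""
    phase = phases[index]
    if lever == "failure":
        # Reduce the failure rate by `fraction` in relative terms.
        changed = replace(phase, p_success=1.0 - (1.0 - fraction) * (1.0 - phase.p_success))
    elif lever == "cost":
        changed = replace(phase, cost=phase.cost * (1.0 - fraction))
    elif lever == "time":
        changed = replace(phase, years=phase.years * (1.0 - fraction))
    else:
        raise ValueError(f"unknown lever: {lever}")
    return phases[:index] + [changed] + phases[index + 1:]


def relative_savings(phases, index):
    """Fractional reduction in cost per approval for each lever."""
    baseline = cost_per_approval(phases)
    return {
        lever: 1.0 - cost_per_approval(improve(phases, index, lever)) / baseline
        for lever in ("failure", "cost", "time")
    }


if __name__ == "__main__":
    for i, phase in enumerate(PIPELINE):
        savings = relative_savings(PIPELINE, i)
        print(phase.name, {k: round(v, 3) for k, v in savings.items()})
```

The sketch makes one mechanism explicit: a lower failure rate in a given phase reduces the number of projects that must enter that phase and every earlier one, whereas a cheaper phase only reduces that phase's own cost, and a faster phase only shortens the time over which capital is tied up.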

It can be seen in Figure 2 that a reduction of the failure rate (in particular across all clinical phases) has by far the most significant impact on project value overall, multiple times that of a reduction of the cost of a particular phase or a decrease in the amount of time a particular phase takes. This effect is most profound in clinical Phase II, in agreement with previous studies [34], and it is a result of the relatively low success rate, long duration and high cost of the clinical phases. In other words, increasing the success of clinical phases decreases the number of expensive clinical trials needed to bring a drug to the market, and this decrease in the number of failures matters more than failing more quickly or more cheaply in terms of cost per successful, approved drug. Selecting better compounds throughout the discovery process has also recently been linked empirically to higher success rates in the clinic in a study at AstraZeneca [35] within the ‘5Rs’ framework, which refers to a careful consideration of the ‘right target, right tissue, right safety, right patient and right commercial potential’ of a candidate compound to be taken forward in discovery and development.

When translating this to drug-discovery programmes, this means that AI needs to support:

(i) better compounds going into clinical trials (related to the structure itself, but also including the right dosing/PK for suitable efficacy versus the safety/therapeutic index, in the desired target tissue);

(ii) better validated targets (to decrease the number of failures owing to efficacy, especially in clinical Phases II and III, which have a profound impact on overall project success and in which target validation is currently probably not yet where one would like it to be [36]);

(iii) better patient selection (e.g., using biomarkers) [32]; and

(iv) better conduct of trials (with respect to, e.g., patient recruitment and adherence) [37].

This finding is in line with previous research in the area cited already [34], as well as a study that compared the impact of the quality of decisions that can be made to the number of compounds that can be processed with a particular technique [31]. In this latter case, the authors found that: “when searching for rare positives (e.g., candidates that will successfully complete clinical development), changes in the predictive validity of screening and disease models that many people working in drug discovery would regard as small and/or unknowable (i.e., a 0.1 absolute change in correlation coefficient between model output and clinical outcomes in man) can offset large (e.g., tenfold, even 100-fold) changes in models’ brute-force efficiency.” Still, currently the main focus of AI in drug discovery, in many cases, seems to be on speed and cost, as opposed to the quality of decisions. This refers explicitly to the frequent utilization of proxy metrics, such as correlation coefficients or RMSEs of models instead of impact on project success, and proxy measures, such as activity on a target instead of readouts related to efficacy or safety. This is a consequence of the fact that AI and deep learning are by and large applied in early-stage drug discovery, when quality is often encoded by unidimensional metrics of activity and properties. How could quality be defined in the context of an AI model beyond R² or RMSE values? For AI to show its value in drug-discovery projects, this focus possibly needs to develop further, so that the sole focus of models is not only on incrementally improving numerical values obtained for models of proxy measures of success (end points for which measured data are available). In short, what we model and how we establish success matters as much as how we model; however, the current focus of AI seems to be mostly on the latter.
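The trade-off between predictive validity and throughput can be illustrated with a toy Monte Carlo model. This is a sketch under our own simplifying assumptions, and it does not reproduce the analysis of [31]: each candidate has a hidden, standard-normal "true quality", the screening model returns a score whose correlation with that quality is `rho`, and the top-scoring candidate is taken forward. The function name `mean_quality_of_pick` and all parameter values are hypothetical.

```python
import random
import statistics


def mean_quality_of_pick(rho, n_candidates, n_trials=300, seed=0):
    """Average true quality of the top-scoring candidate.

    rho is the correlation between the model score and the true quality;
    n_candidates is the number of candidates screened per campaign.
    """
    rng = random.Random(seed)
    noise_scale = (1.0 - rho ** 2) ** 0.5
    picks = []
    for _ in range(n_trials):
        best_score = float("-inf")
        best_quality = 0.0
        for _ in range(n_candidates):
            quality = rng.gauss(0.0, 1.0)
            # Score and quality both have unit variance and correlation rho.
            score = rho * quality + noise_scale * rng.gauss(0.0, 1.0)
            if score > best_score:
                best_score, best_quality = score, quality
        picks.append(best_quality)
    return statistics.mean(picks)


if __name__ == "__main__":
    # Compare throughput (number screened) against predictive validity (rho).
    for rho in (0.3, 0.4, 0.5):
        for n in (100, 1000):
            print(f"rho={rho}, screened={n}: {mean_quality_of_pick(rho, n):.2f}")
```

In this toy setting, the expected quality of the selected candidate scales linearly with `rho` but only slowly with the number of candidates screened, so a more predictive score applied to fewer candidates can outperform a less predictive score applied to ten times as many.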

We next look at how drug discovery is typically structured in many pharmaceutical companies, and why the quality of decisions made, using AI algorithms, is not trivial to change in this context.

The current analytical way of drug discovery using isolated mechanisms and targets

Drug discovery has developed over the years. In the time of James Black, often small series of hundreds of compounds were synthesized, tested and optimized in animals empirically (leading to drugs such as cimetidine and propranolol [38]). But with the advent of molecular biology, an increasing amount of effort has been made to understand the molecules that living systems are made of, and how they can be modulated to cure human disease. The human genome has been sequenced [39,40] (at the cost of US$1 billion originally, although it can now be done for less than $1000 in one day), leading to our ability to annotate genes and proteins systematically. In the drug-discovery area, this has led to the discovery of, for example, receptors and receptor subtypes, and to the development of targeted therapies. Subsequently, in line with the analytical Western mindset, biology has been taken apart, and we have attempted to understand it at the cellular level on the basis of its individual components. A central dogma emerged: deficiencies in one (or more) of the components are the cause of disease, and hence the modulation of such components will allow us to cure disease in turn. The era of molecular biology and modern drug discovery via isolated model systems had begun. In some cases, such as targeted therapies, infectious diseases and hormonal therapies, this is an appropriate approach [41]. In other areas, however, owing to the connectivity of biology with feedback loops of a dynamic and often unknown nature (and that, importantly, are insufficiently formalized and quantified [42]), this has turned out to be too simplistic [41].

More recently, phenotypic screening has attempted to merge disease-relevant biology with large numbers of compounds that can be screened, and both approaches seem to be complementary and useful [43]. Phenotypic screening comprises a wide range of hypothesis-driven and hypothesis-free readouts, but it is not trivial to come up with a disease-relevant assay with a meaningful readout that is able to take full advantage of the phenotypic screening principle in practice [44]. In short, if phenotypic screens are hypothesis-driven (and targets of interest are identified beforehand) then the argument for a phenotypic screen is vastly weakened; and if a screen is truly phenotypic, then understanding the high-dimensional readout (without an underlying hypothesis) can be non-trivial. Target identification can still represent a problem [43], although a wide variety of powerful techniques for this step do exist [45]. Hence, phenotypic screens do incorporate some of the cellular complexity of biology, but (depending on the precise assay setup) they are still not able to recapitulate intracellular organ toxicity, PK, and so on to a reasonable extent. Hence, they only represent a gradual change from the analytical view on disease biology outlined here, although, without doubt, they can be useful in some situations.

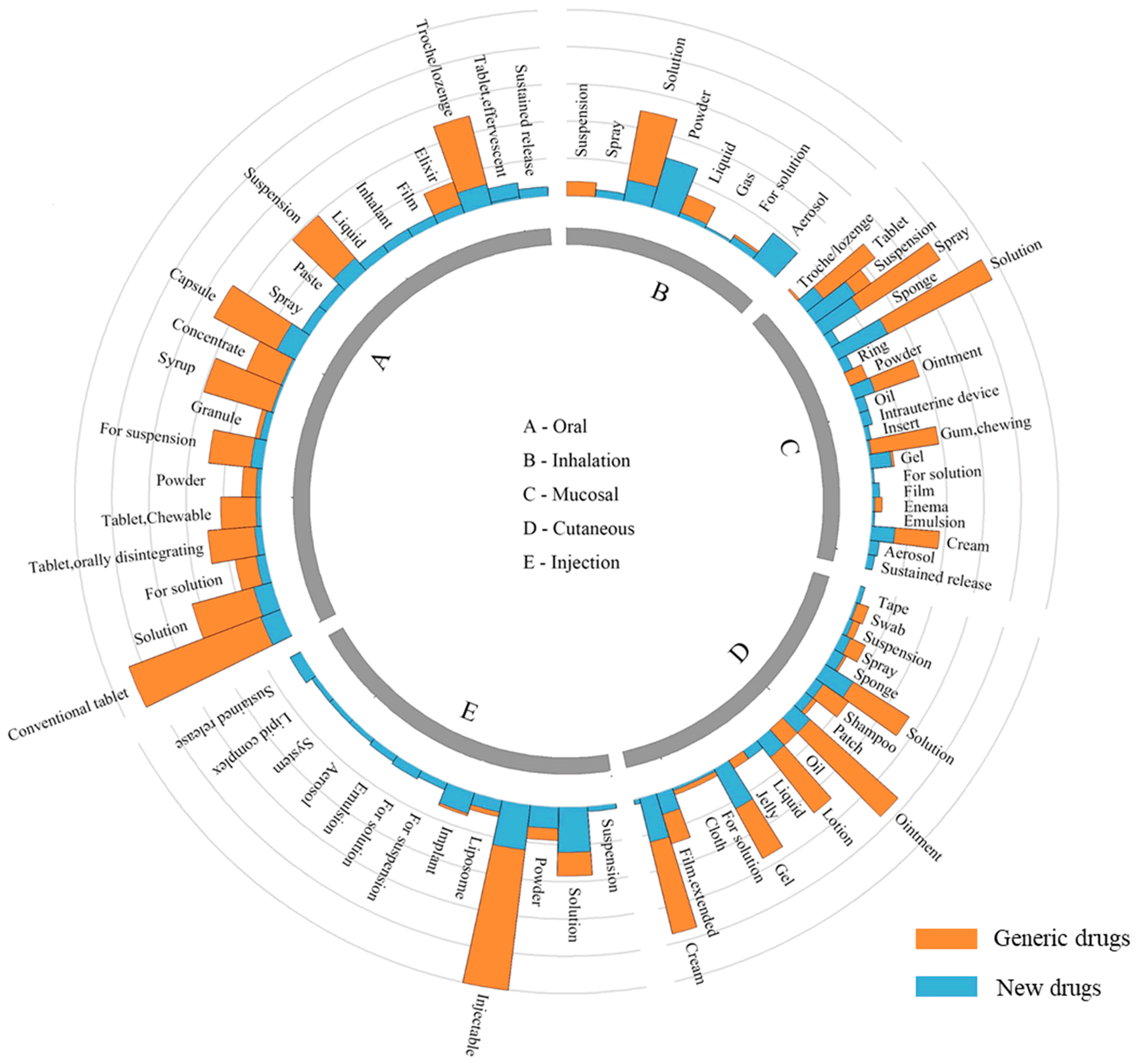

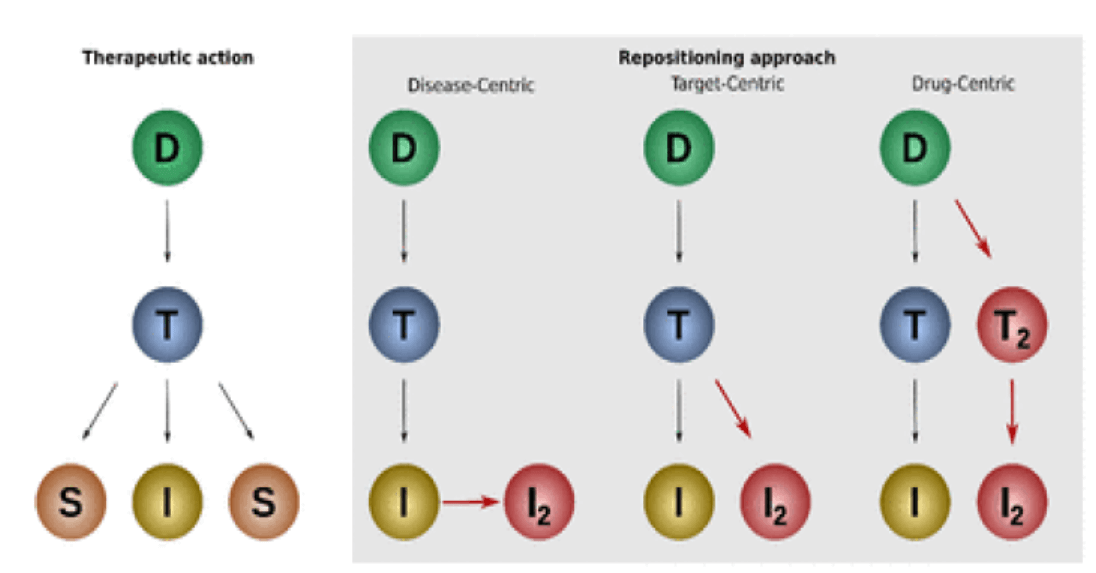

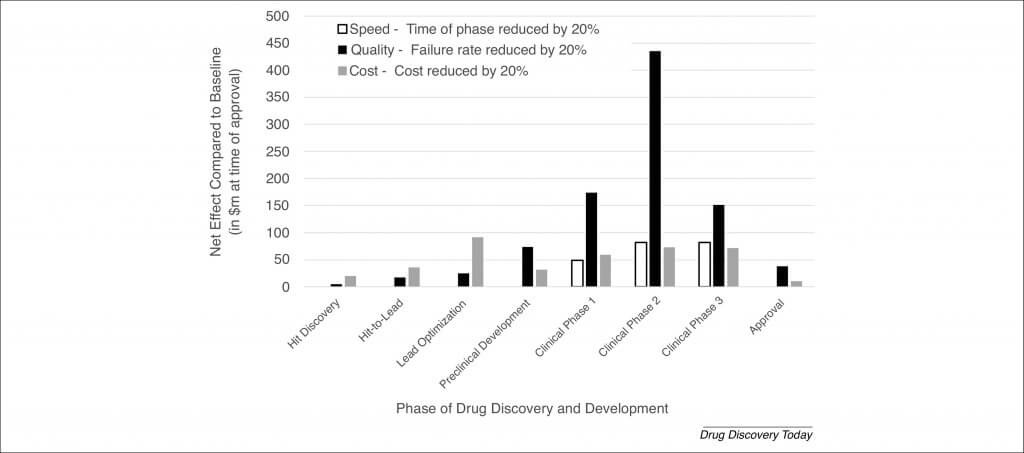

This reductionist view on drug discovery, which still forms the majority of current efforts to apply AI to the field, is illustrated in Figure 3. The way bioactive substances achieve their action in a biological system is shown at the top of the figure, whereas the analytical, modern way of drug discovery is shown at the bottom. It can be seen that for a compound to achieve an effect in a biological system, it must show activity on the target(s) of interest. But at the same time, its full ADME properties, activities against other proteins and even other factors such as its interactions with membranes, accumulation in organelles and changes in pH play a part in any observed activity. The basic principle of modern drug discovery is to identify a protein (or more generally a deficient or overactive biological mechanism) that does not function as needed in a diseased system, and then find modulators of this mechanism. But it does so without initially paying attention to the whole spectrum of properties that a compound exhibits in vivo, including its parent compounds, its metabolites, concentration-dependent effects, and so on. The underlying principle is that this can be ‘done later’.

There are multiple conceptual problems with this approach, some of which are of crucial importance for approaches of AI in drug discovery, given that AI models also rarely consider the complexities of biology from the outset. The first problem is that this approach is plausible only in the case of monocausal diseases. Such cases certainly do exist: for example, in the case of viral infections in which a certain protease is required for replication or a receptor is required for cell entry. Also, target-based drug discovery has led to a significant number of approved drugs, and in particular to follow-up compounds when a system tends to be better understood. This approach has shown real impact [30]. However, only a minority of more-complex diseases fall into this category, leading to frequent failures in the clinic, in particular as a result of poor efficacy. The second problem is that achieving activity in a model system, such as against isolated proteins, neglects the question of whether the compound reaches its intended target site (taking its PK profile into account [10]), whether it is able to revert the diseased phenotype and, if so, whether it achieves this goal with tolerable side effects. Selecting chemical matter for only one objective, usually activity against the primary target, and relegating all other aspects of compound action for later has caused practical problems in drug discovery in the recent past. Also, attempting to take into account other compound properties via simple model-system measurements is unlikely to achieve the goal as desired. As shown above, the quality of decisions is key, and is more important than cost and speed; optimizing compounds in only partially relevant and predictive proxy space is unlikely to allow AI systems to make decisions with the required quality.

Although the aspects mentioned in the previous paragraph are common to reductionist drug-discovery efforts, the key aspect is that many current approaches of AI in the field aim to transfer methods from image or speech recognition (such as different types of neural networks), with the aim of improving the prediction of a particular molecular-property end point quantitatively. In many cases, numerical performance improvements of the models can be achieved [46], but the relevance of this particular end point for in vivo efficacy and safety is often neglected. Will an improved prediction of logD, solubility or mutagenicity in an AMES assay by a few percentage points really be a game-changer for drug discovery when it comes to safety and efficacy in the in vivo situation?

An example of how difficult it is to have clear compound–target–effect links, which would be required for the approach shown in Figure 3 to succeed, is represented in Box 1 for ketamine, based on an excellent recent article on this compound [47]. Ketamine has proven useful for various purposes since 1970; however, its indications, as well as its modes of action (and whether its efficacy is linked to the parent compound or its metabolites, see Box 1), are still poorly understood. The inability to assign clear links between chemical entities, proteins and modes of action on the one hand and effects on the other poses challenges for the current application of AI in drug discovery. The data we deal with are multi-dimensional and heavily conditional, an aspect we describe in more detail in the second part of this article [2].

Box 1

Understanding indications, modes of action and the impact of PK is not trivial: the many facets of ketamine (based on [47])

Ketamine is used as both an anaesthetic, which has been approved since 1970, and a street drug. However, in 2000 it was found to work as an antidepressant at doses significantly lower than when it is used as an anaesthetic [49]; and in addition, its bronchodilatory properties are well known. Although ketamine has long been thought to act by blocking the NMDA (N-methyl-d-aspartate) receptor, other NMDA blockers such as memantine and lanicemine have not been successful in clinical trials, hinting at a difference in their respective modes of action that is not fully understood. More recently, the opioid system has also been implicated in the action of ketamine, given that naltrexone (which acts on the opioid system) has been shown to influence the effect of ketamine [50] (although another study found the opposite [51]). Furthermore, a metabolite of ketamine has recently been found to be active in animal models of depression [52], with human studies still being required.

This case illustrates the difficulty of annotating drugs with modes of action and indications, rendering the application of AI approaches to such poorly labelled data non-trivial.

Phenotypic screening [43,44], which is more of a spectrum of approaches than a ‘black and white’ distinction from target-based approaches, could potentially bridge the complexity of self-regulating biological systems and the immense practical convenience of target-based drug discovery. Recent developments in finer disease endotyping, such as in asthma [48], could also help to bridge the gap. And given sufficient understanding of a disease, target-based drug discovery certainly has its place, especially when molecular mechanisms can be specifically targeted to ameliorate disease on an individual basis. However, in our opinion, it seems that in the context of AI approaches in drug discovery, the limitations of this are in some cases not fully realized when applications and case studies are presented.

Target-based, reductionist approaches in drug discovery have led in recent decades to the development of high-throughput techniques for proxy end points that are quick and cheap to measure, and in cases in which the target was sufficiently ‘validated’, to many successful drugs. These techniques have generated large data sets with proxy data, such as for physicochemical end points and on-target activities. And because these are the data we have, current approaches of AI in drug discovery often aim to model such proxy end points, the relevance of which for drug discovery is at best only clear when looking at them in the context of all other properties and when recognizing their limitations, such as in a given biological disease context. These limitations of proxy measures, which are the main focus of current AI approaches in the field, are what we focus on next.

Chemical and biological properties of relevance for drug discovery and the extent to which they are captured in current data

In this section, we briefly review proxy measures used in drug-discovery projects, as taken in particular from a recent publication [53], and comment on their suitability for decision making with respect to the in vivo situation. Table 2 represents a ‘typical’, and reasonably representative, set of properties used during a drug-discovery project for early-stage decision making (although note that it is not meant to be exhaustive or definite in any way). The list of properties is accompanied by comments on individual end points with respect to their ability to make qualitatively good predictions as to the suitability of particular compounds for future use as drugs.

Table 2. Properties relevant for drug discovery and information captured in assays used on a large scale for data generation (footnote a)

| Assays | Comments | Useful as predictor for human in vivo situation? |
|---|---|---|
| On-target activity as a proxy for efficacy (and off-target activity as a proxy for adverse events) | Target validation difficult; weak links between individual targets and phenotypic effects (both related to efficacy and toxicity) | Target engagement in vivo is frequently sufficient for causing phenotypic effects; however, activity on a target in isolated protein or cellular assays often differs from the situation in vivo owing to, e.g., pharmacokinetic properties, so both generally need to be considered in combination |
| Physicochemical properties (e.g., solubility, lipophilicity) | Relevant for almost any aspect of drug development owing to the application of drugs in largely aqueous biological systems; in particular in the case of oral dosing (to ensure bioavailability) | Crucial especially for orally administered drugs; broad correlations with many drug properties, especially in ADME space (bioavailability, promiscuity, distribution, metabolism, etc.); however, needs to be considered in combination with other compound properties to be relevant |
| PK end points (e.g., microsomal stability, CYP inhibition, Caco-2 permeability) | Generally simplified cellular systems to anticipate uptake, metabolism and transport of compounds across body compartments. Represent simplified version of respective tissue or organ system | Organs and tissues are considerably more complex and heterogenous systems than simplified cellular systems. Heterogeneity of different cell types in a tissue or organ, adaptive responses (such as changes in expression), and aspects such as the impact of the microbiome [58] on the in vivo situation are generally neglected |
| Cellular toxicity | Human HepG2 cells can act as a surrogate for effects of toxicity on human liver, an important cause of drug failure in the clinic, and cytotoxicity is often an early-stage marker for adverse effects of compounds (in combination with other parameters), etc. | Heterogeneity of organs and tissues generally not considered, and responses of isolated cells or cell lines often differ from those of more complex systems |
| Heterogeneous cell cultures/3D models | Heterogeneous cell cultures generally resemble organ systems and their behaviour better than individual cells/2D cultures [59] | Generally more representative of the in vivo situation, but more difficult to handle and only a relatively recent development, so generally no large data sets are currently available |
| Toxicity/safety | Many proxy end points exist for various aspects of compound safety; however, they generally focus on individual readouts (as opposed to true organ-based toxicity as an end point) [60] | Safety can be interpreted as the therapeutic index, which quantifies the difference in exposure between efficacy and safety for a drug. However, this requires the ability to estimate organ-based toxicity in a quantitative way (considering compound PK), which is generally not easily done today |
| Animal models: efficacy | Animal models have sometimes been seen as a ‘gold standard’ for efficacy testing, but their representativeness and predictivity for both efficacy [61] (e.g., in PDX models [62]) and safety in humans is not necessarily a given and depends on the precise case [63] | Depends heavily on the case (disease, choice of animal model, etc.). Some organ systems and readouts translate much better between animals and humans than others [63]. General trend to avoid animal research where possible, owing to cost, ethical reasons and generally suboptimal predictivity for the human situation |

a. It can be seen that many of the readouts generated on a large scale often do not possess sufficient relevance for making decisions for an in vivo situation; they have rather been chosen as a compromise of relevance, speed and cost. Adapted and expanded based on [53].
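
The therapeutic index mentioned in Table 2 can be made concrete with a short calculation. The following is a minimal sketch, assuming one common convention in which the index is the ratio of the exposure at which toxicity appears to the exposure needed for efficacy; the function name and the example values are hypothetical and only serve as illustration.

```python
def therapeutic_index(toxic_exposure: float, efficacious_exposure: float) -> float:
    """Ratio of the exposure at which toxicity appears to the exposure needed for efficacy.

    Both values must use the same exposure measure and units (an assumption of
    this sketch); larger ratios indicate a wider safety margin.
    """
    if toxic_exposure <= 0 or efficacious_exposure <= 0:
        raise ValueError("exposures must be positive")
    return toxic_exposure / efficacious_exposure


# Hypothetical values for illustration only, not measured data.
example_ti = therapeutic_index(toxic_exposure=50.0, efficacious_exposure=5.0)
print(f"therapeutic index: {example_ti:.1f}")
```

The calculation itself is trivial; the difficulty discussed in the table lies in obtaining quantitative, organ-level estimates of both exposures in the first place.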

It can be seen that, overall, many of the end points have only intermediate predictivity for in vivo end points. This is, to an extent, the result of favouring high-throughput measurements over less-scalable measurements with direct in vivo relevance, which practically would have been difficult to achieve. Possibly surprisingly, low predictivity for the human in vivo situation is also often the case for animal models, in terms of both efficacy and safety (although in part this might be due to the study design [54]). Predictions of efficacy depend heavily on the individual animal model used [54]; however, in the area of adverse events, the presence of an adverse event in an animal is generally predictive of an adverse event in humans (i.e., toxicity is easier to extrapolate between species than safety) [55]. A recent meta-review of 121 studies also found a wide range of predictivities of animal models for the human case [63], and cautioned that there might well also be a time element to the data used (i.e., that more recently there has been an increased focus on the aspect of translatability to humans).

In recent years, there has been a trend towards more clinically relevant model systems, some of which are also patient specific. At a simpler level, this includes heterogeneous cell systems for higher-throughput testing, as well as considering parameters such as cell–cell interactions in 3D [64] and permeability. At a more complex level, it includes patient-derived test systems, such as patient-derived xenograft (PDX) models in cancer [52,65]. These types of system might have a significant impact on drug discovery in the future; however, at the current stage, there are relatively few data available that can be used in an AI context for mining. Another problem is that the more patient-specific a system becomes, the less it generalizes, so a large enough amount of data needs to be generated for it to become practically useful.

An additional layer of complexity when using data for decision making is their often non-binary nature, in particular when considering in vivo readouts, for example from histopathology. Assigning observations of significance to a compound depends, besides the structure itself, on exposure, subjective interpretation of observations (although this can be made more objective using image-recognition techniques [57]), the mutual dependence of end points (one end point has a different meaning in the context of the presence or absence of another end point), sufficient sampling and subjective use of terminology, among other points. All of this renders this type of data non-trivial to use for AI decision making, owing to its complexity.

To conclude this section, although we have seen before that there is a need to make decisions with high quality, the data that have been generated in high-throughput systems in recent decades can only be used to make this type of decision in some cases, as a result of the proxy nature of the data. The generation of high-throughput proxy data has been driven partly by its practical relevance, but also to some extent by the low cost and high speed of generating such measurements. The need to make decisions with sufficient quality is only compatible in some cases with the data we have at hand to reach this goal. If we want to advance drug discovery, then acknowledging the suitability of a given end point to answer a given question is at least as important as modelling a particular end point, as judged by a numerical performance measure.

Current landscape: is it AI in drug discovery or in ligand discovery? What do we need to advance further?

Here, we briefly outline where AI approaches have been used for drug discovery in the recent past. Given the area has grown tremendously, we provide only a few spotlights on the burgeoning field.

Starting from new chemical matter, the area of de novo design [66], in which novel structures with desired properties are devised computationally, has received significant attention. So have the related areas of forward prediction and retrosynthesis prediction [67], which aim to identify how chemical matter, once identified as being worthy of experimental investigation, can be synthesized. (Retrosynthesis prediction refers to the identification of efficient synthesis routes given a target compound, whereas forward prediction deals with the prediction of the outcome product of a reaction given a set of reagents. Both are related, but still need to address somewhat different challenges in a practical implementation.) Once a ligand is in place, the logical next step is discerning whether it binds to a particular protein target; here, docking (and related approaches) and in silico target prediction have been active areas of interest for decades, starting with PASS [68] and related approaches [69,70,71]. This area has recently been revived significantly, leading to larger-scale comparisons [72] and the application of methods such as deep learning [72,73] and matrix factorization [74,75], as well as the inclusion of additional types of information such as cell morphology readouts [74,75]. These are the chemical stages of using AI in drug discovery, which have been the main areas of research in the recent past. For the most part, these are areas in which the data are labelled sufficiently well for data mining, and hence computational analyses can have a significant impact on anticipating ligand–protein interactions. Generally speaking, approaches such as deep learning have had a somewhat positive impact on increasing numerical measures of performance (often marginally) when it comes to predicting ligand–protein interactions [72,73,76]. However, particular attention needs to be paid to model performance measures in this context [77].

But the connection from a particular mode of action to a given phenotypic effect, such as curing a disease, needs to be in place for a compound to exhibit the desired effect in vivo. The fact that genetic support is, generally, correlated with increased likelihood of drug success illustrates this point [78]. Here, the area of target identification has been active for many years, with approaches such as genome-wide association studies [79], functional genomics [80] and, more recently, the development of techniques such as CRISPR [81]. However, in many cases the relationships unearthed are less clear than one would hope for, and hence less actionable from the drug discovery perspective. This can be attributable to confounding factors, as well as complex genotype–phenotype relationships involving multiple genes, environmental factors, and so on [79]. In addition, it needs to be kept in mind that identifying that a particular gene or protein is involved in causing a disease does not mean that one is able to cure the disease by targeting it with a small molecule (or a particular other technique) using current techniques. One reason for this could be an inability to activate proteins that have been inactivated by mutations. Another obstacle could be the difference between ligand–protein interactions in the functional domain (targeting a single binding site versus removing all of the interactions of a protein) and the temporal domain (inducing a temporary inhibitory effect versus a permanent knockdown), which is of significance for resistance in cancer therapy, for example [82]. So although there has been significant interest in identifying disease drivers, this does not, by itself, offer an easy path to identifying a target that could be modulated to cure the disease (except in simple cases).

Combining ligand–protein activity and target identification with the PK properties of the compound in an integrated manner is what is currently still missing when it comes to AI in drug discovery. This would involve: (i) modelling the interactions of a small molecule with all of its interaction partners; (ii) addressing the question of target expression in the disease tissue and its involvement in disease modulation; (iii) including the PK behaviour of a molecule with respect to the in vivo system in the analysis [10,11]; and (iv) considering safety in parallel with efficacy from the onset. This is the aim of quantitative systems pharmacology (QSP) [83], in which significant effort has been made in recent years, but our understanding of certain efficacy- and safety-related processes still needs to be improved to base practical decisions on such models.

As opposed to this integrated view on drug discovery (and applications of AI within it), the current situation is rather more fragmented. AI is probably able to design ligands for proteins and to lead to easier synthesis, so it does enable us to discover ligands. This step is worthwhile in itself: in cases in which we have a protein we want to find a ligand for, we are now more able to do so. In this direction, technical advances such as those by IBM [84] and others have been published [85], and multi-objective optimization for end points has been around for a number of years [86]. But this question is rather different from being able to design a drug that is efficacious and safe in vivo. This represents a problem, given what we have discussed earlier in this article. We have seen that we need to make better-quality decisions on which compound to advance; however, much of the proxy data we have only provides limited value for making such decisions in practice. Hence, what should those decisions be made on? And, given the data currently available, can AI improve decision making in that space, and if so, to what extent? These more fundamental questions need to be addressed to apply AI in drug discovery in a truly meaningful way.

AI in drug discovery might have some fundamental shortcomings in its current state, but it has only been able to advance to where it is today as a result of the contribution of aspects of human psychology, some of which are summarized in Box 2. Given that the future opportunities of a particular technique can be difficult to define, the blanks often get filled in with one’s own beliefs, thereby giving a subjective approach to the supposedly objective field of science, and to the application of AI in drug discovery in particular (see [17] for related discussions).

Box 2

The bigger picture: AI in drug discovery, embedded in human psychology and society

There are several societal and psychological drivers that can preclude a realistic assessment of methods that are currently en vogue. Many of these also apply to the field of AI in drug discovery, and include the following:

● Hype brings you money and fame (and realism is boring).

● FOMO (fear of missing out) and ‘beliefs’ often drive decisions in the absence of objective criteria on how to judge a method.

● ‘Everyone needs a winner’ (‘after investing X million we need to show success’).

● Selective reporting of successes leads to everyone declaring victory (but in reality no one is able to evaluate the relative merits of methods).

● Difficulty to really ‘advance a field’ with little real benchmarking taking place.

● Subjective attribution of success to a method (in which multiple factors are at play).

● The dynamics of science: a paper in a ‘high impact’ journal gives you a grant, and a grant gives you a ‘high impact’ publication (at the detriment of novel developments, and those by not yet established researchers or those not from particular sets of institutions).

● Belief that technology and modelling is what drives a field forward (as opposed to understanding and insights).

● Disconnect between academic work and novel fundamental discoveries and practical applications.

Validation of AI models in drug discovery, competitions and data sharing

Given the aim of making better decisions using AI, the question arises of how to evaluate beforehand which decision support systems are better at this than others. Unfortunately, progress in this area suffers from a number of shortcomings: some of them are specific to the field, whereas others are a result of the way scientific progress, communication and funding are currently structured. In the following, we focus on conceptual problems of validation of AI methods in drug discovery, some of which are also mentioned in [3] and [15]. For a recent discussion of the use and misuse of different numerical performance measures of models, see [77].

First, and possibly most importantly, many of the current efforts of AI in drug discovery are focused on ligand discovery, and this can certainly help in validating a target with respect to its ability to recover the diseased phenotype. However, in the context of drug discovery, a ligand is not (yet) a drug. Hence, if AI approaches for drug discovery only end up generating a ligand for a protein, then there is no evidence that this will help drug discovery as a whole; helping drug discovery as a whole is hence an important aim for the future. To validate AI systems in drug discovery, we need to move to more complex biological systems (and the clinic) earlier, and more often. At the computational level, this means including more predictive end points in models, related to both efficacy and safety. This might well involve the need for generating new data, and these data might be more complex than simply consisting of single end points (which are computationally easier to optimize for).

Second, there are often no control experiments being conducted when AI delivers new compounds (such as no baseline methods being applied in parallel). Given that a drug coming to market is a long series of choices, it is hence often impossible to disentangle whether the end product is a result of the method applied or the result of subjective choices on which compound to test. One is then tempted to ascribe a positive outcome to the part one is most interested in.
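
One simple form of the control experiment described above is to compare the hit rate of an AI-selected set against what random picking from the same library would be expected to deliver. The sketch below assumes that the number of actives in the library is known (which is rarely the case in practice); the function names and all numbers are hypothetical.

```python
from math import comb


def enrichment_factor(hits: int, n_picked: int, actives: int, library_size: int) -> float:
    """Hit rate of the selection divided by the hit rate expected from random picking."""
    return (hits / n_picked) / (actives / library_size)


def p_at_least_hits_by_chance(hits: int, n_picked: int, actives: int, library_size: int) -> float:
    """Hypergeometric tail: probability of >= `hits` actives among `n_picked` random picks."""
    inactives = library_size - actives
    favourable = sum(
        comb(actives, k) * comb(inactives, n_picked - k)
        for k in range(hits, min(n_picked, actives) + 1)
    )
    return favourable / comb(library_size, n_picked)


# Hypothetical campaign: 10,000-compound library containing 100 actives;
# the model selected 50 compounds, of which 5 were confirmed active.
ef = enrichment_factor(hits=5, n_picked=50, actives=100, library_size=10_000)
p_value = p_at_least_hits_by_chance(hits=5, n_picked=50, actives=100, library_size=10_000)
print(f"enrichment factor: {ef:.1f}, probability by chance: {p_value:.2e}")
```

Random picking is only the weakest possible baseline; a fairer control would also run an established method (or an experienced chemist's selection) in parallel on the same library.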

Third, given that there is a large chemical space from which to pick possible validation compounds, and given that there is a desire to show that a new system succeeds at its goals, this tends to lead to trivial validation examples. This results in a focus on trivial wins instead of a real validation of methods that address the need to access novel chemical and mechanistic space, and hence allow us to treat diseases in novel ways.

Fourth, method validation in the chemical domain is tremendously difficult because one does not know the underlying distribution of chemical space. In other words, one is never able to truly prospectively test models, except with a very large prospective experiment, including controls. This is a systematic shortcoming that will be conceptually difficult to overcome and that retrospective validation can only address if one assumes that the predictions that will be made in the future will resemble those made for validation. (This is not the case when, for example, aiming to explore novel chemical space, and hence defeats the purpose of these models.)
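
Whether future predictions "resemble those made for validation" can at least be checked numerically, for example by measuring how similar a query compound is to its nearest neighbour in the training data. The following is a minimal sketch using Tanimoto similarity on toy fingerprints; representing compounds as sets of integer feature identifiers is an assumption made here for illustration, and all names and values are hypothetical.

```python
def tanimoto(a: frozenset, b: frozenset) -> float:
    """Tanimoto (Jaccard) similarity between two sets of fingerprint features."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def nearest_training_similarity(query: frozenset, training: list) -> float:
    """Similarity of a query compound to its most similar training compound."""
    return max(tanimoto(query, t) for t in training)


# Toy fingerprints: each compound is a set of hypothetical substructure feature ids.
training_set = [frozenset({1, 2, 3, 4}), frozenset({2, 3, 5}), frozenset({1, 4, 6})]
retrospective_query = frozenset({1, 2, 3})  # close to a training compound
novel_query = frozenset({1, 7, 8, 9})       # mostly unseen features

sim_retrospective = nearest_training_similarity(retrospective_query, training_set)
sim_novel = nearest_training_similarity(novel_query, training_set)
print(f"retrospective: {sim_retrospective:.2f}, novel: {sim_novel:.2f}")
```

A low nearest-neighbour similarity does not say that a prediction is wrong, only that the retrospective validation results provide little evidence about its reliability.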

Fifth, and connected to the previous point, the performance reported and the data are related. The data used for validation are usually not sufficiently characterized to put the performance into the context of the underlying data distribution that a given performance was obtained for. The numbers alone are hence meaningless and not suitable for comparing models in any way.
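
A small example of why a performance number is uninformative without a description of the underlying data: on a strongly imbalanced validation set, a "model" that never predicts an active compound still reaches a high accuracy. This is a sketch with hypothetical data, and class balance is only one of several aspects of the data distribution that would need to be reported.

```python
def accuracy(truth: list, pred: list) -> float:
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(truth, pred)) / len(truth)


def balanced_accuracy(truth: list, pred: list) -> float:
    """Mean of the per-class recall for binary labels 0/1 (both classes must be present)."""
    recalls = []
    for label in (0, 1):
        idx = [i for i, t in enumerate(truth) if t == label]
        recalls.append(sum(pred[i] == label for i in idx) / len(idx))
    return sum(recalls) / len(recalls)


# Hypothetical validation set: 95 inactives (0) and 5 actives (1).
truth = [0] * 95 + [1] * 5
always_inactive = [0] * 100  # trivial baseline that never predicts an active

acc = accuracy(truth, always_inactive)
bal_acc = balanced_accuracy(truth, always_inactive)
print(f"accuracy: {acc:.2f}, balanced accuracy: {bal_acc:.2f}")
```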

Sixth, and connected to the preceding two points, comparative benchmarking data sets are retrospective, but because (i) they are limited in size compared with the chemical space and (ii) we do not know the underlying distributions in either the entire chemical space or in the part of it that we will be interested in in the future, we will never be able to have a true estimate of model performance from those comparisons. In addition, although retrospective data sets allow us to compare the performance of methods numerically, the desire to perform well on a benchmark makes this set-up prone to overfitting of models, because there is no true, prospective data set present. This results in still unknown performance for a real-world prospective situation. Recently, some novel approaches have been published [87] that explicitly aim to address the difference between evaluating model performance on the ‘re-discovery’ of data points obtained already, compared with the discovery of something truly new, and to provide a finer analysis of the applicability domain of models [88]. This is a valuable direction of research, which in the works cited mostly relates to the domain of materials discovery. Still, in the field of drug discovery, success in a project takes a long time to be established, and it is the result of a long list of choices, so linking this to a particular computational model is intrinsically difficult. The question of which end points to validate a model on (i.e., which are practical for an in vivo situation) remains as well.
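
The overfitting risk of a fixed retrospective benchmark described above can be illustrated with a toy simulation. In this sketch, every "model" guesses labels at random, so none has any real predictive power; nevertheless, the model that happens to score best on the benchmark looks better than chance, whereas its score on fresh data carries no such selection advantage. All names and parameters are hypothetical.

```python
import random


def accuracy(pred: list, truth: list) -> float:
    """Fraction of predictions that match the true labels."""
    return sum(p == t for p, t in zip(pred, truth)) / len(truth)


rng = random.Random(0)  # fixed seed so that the simulation is repeatable
n_models, n_samples = 200, 50

benchmark_truth = [rng.randint(0, 1) for _ in range(n_samples)]
fresh_truth = [rng.randint(0, 1) for _ in range(n_samples)]

best_benchmark_score = -1.0
fresh_score_of_best = 0.0
for _ in range(n_models):
    # Each model is a pure random guesser on both data sets.
    benchmark_pred = [rng.randint(0, 1) for _ in range(n_samples)]
    fresh_pred = [rng.randint(0, 1) for _ in range(n_samples)]
    score = accuracy(benchmark_pred, benchmark_truth)
    if score > best_benchmark_score:
        # Select the "winner" on the benchmark, as a competition would.
        best_benchmark_score = score
        fresh_score_of_best = accuracy(fresh_pred, fresh_truth)

print(f"best benchmark accuracy: {best_benchmark_score:.2f}")
print(f"same model on fresh data: {fresh_score_of_best:.2f}")
```

Real models are of course not random guessers, but the same selection effect applies whenever many methods or hyperparameter settings are compared on one fixed data set.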

Furthermore, on the practical side, as more broadly in the life sciences, replication problems have been associated with applying AI methods in the medical field [89,90,91], and algorithms have been applied in the medical domain without taking the peculiarities of that field into account [92]. The latter can lead to the development of models that are biased [93], that learn spurious associations in the data or that are not able to handle biological drift appropriately [26]. Given that important decisions in the medical field can be based on such models, it is important to address such shortcomings before wider deployment; and wider deployment will also require the generalization of models [94]. But in earlier-stage discovery, in particular when biological readouts are part of model development (as they should be), it is important to understand the difficulty in capturing and representing such data to learn from it, an aspect that is addressed in more detail in the second part of this work [2].

Competitions represent one way in which method comparison has been attempted (e.g., the CAMDA [95] and DREAM [96] challenges). One might argue that this is a fair way of comparing approaches, given that the same training data sets are available to all participants, that test data is (or at least can be) blinded and that consistent model scoring metrics are applied. However, competitions, especially in the area of drug discovery, are not trivial to perform. Data sets of sufficient size with relevant end-point annotations are not frequently available publicly (compared with other areas, such as image recognition, data-set sizes often are many orders of magnitude smaller). In addition, end points and performance measures used in competitions often do not have direct relevance for the in vivo situation; rather, they are proxy measures (e.g., of the types discussed above) that are easy to evaluate and score. Aside from this, there is the human tendency to maximise the performance of models on a particular data set used during the competition; however, a model that attains a high-scoring metric on a particular annotated end point is not necessarily the most practically useful one (i.e., it might not be so successful with novel data or with decisions that are relevant for drug discovery in practice). Models that are generated as the result of competitions are laudable in that they technically explore what is possible in the given context of data and performance metrics applied, but the competition format means that such models do not tend to extrapolate well to real-world drug discovery projects, owing to the competition’s limited data and choices of end points, and the lack of assessment of the true prospective translation of methods. 
Many current data analyses seem to deliver very similar results [77,97,98] in the end; therefore it is unlikely that the future of AI in drug discovery lies in the development of the right analysis method, but rather in asking the right question (and hence modelling the right end point) in the first place.

To change the current situation of a scarcity of relevant data end points, consortia are likely to play a part in the future: not only those for data sharing (e.g., the Innovative Medicines Initiative projects [99]), but also those for data generation, including the coverage of sufficient chemical as well as biological space. However, it needs to be acknowledged that a certain conflict exists between the desire to advance methods generally on the one hand and data confidentiality and having an advantage over competitors on the other. Just compiling the existing data (which might be generated in different formats, use inconsistent annotations and lack overall experimental design) is unlikely to fulfil the current needs for AI in drug discovery to truly succeed in terms of having the right types of data. If the parts of chemical space or biological readout space that are needed to answer a question are not contained in what is available, algorithms will not be able to fill in those gaps. And here, not just any data will do. To quote Sydney Brenner: “Indeed, there are some who think that all that will be required is the collection of more and more data under many different experimental conditions and then the right computer program will be found to tell us what is going on in cells. […] this approach is bound to fail […].” [100]. The data need to contain a signal to answer the concrete questions we ask from them, which means data supported by understanding and solid hypotheses.

Improving on the current state of the art of using AI in drug discovery

As we have seen above, to fully utilize AI in drug discovery, we need to improve the quality of decisions we make with respect to compounds taken forward into the clinic. But the data available to make those decisions are, in many cases, not entirely suitable for this end. What does this mean in practice? In which areas do we need to focus our effort to advance the current state of the art?

We need better compounds going into clinical trials, including the right dosing/PK for achieving a safe therapeutic index, which involves selection via efficacy and safety-relevant end points (not just by using proxy measures that are easy to generate but not informative about these aspects). In this category, 3D models, which often have better predictivity than cell lines [59], might be helpful; and in later stages, truly predictive animal models could be used for safety and toxicity end points [63]. Apart from choosing the right compound, the related question of choosing the right dose has often come down to trial and error in practice. However, with the arrival of more in vivo relevant end-point data, as in the case of rat PK models [11], it is becoming increasingly possible to model quantitative aspects of efficacy and safety more directly, and quite possibly better, than with other approaches based on proxy measures, in particular with the increasing amounts of data available. (However, the coverage of chemical space, or the relevance for particular tissues [101] or subcellular compartments [102], remain areas in which further development is needed in the PK area.) From these types of model we could obtain more meaningful data, which can then be employed by AI algorithms in a more effective manner than data from proxy end points with less relevance.

The question of which compound to advance is related to the question of its mode of action in vivo, so we need better validated targets or, more generally, better validated modes of action of compounds [36], as described above. This distinction between targets and modes of action might turn out to be rather important in cases in which multiple (or non-specific) compound interactions are related to efficacy, and also in cases in which single targets that have been identified as drivers of disease cannot be found or cannot be utilized for efficient reversal of the disease state. It has been shown that genetically validated targets in many cases do have a higher likelihood of clinical success [78,103]. But owing to intricacies with statistics and the complexity of biology as a homeostatic system, identifying single drivers of disease is unlikely to be trivial in future. Therefore, a more general mode-of-action view might be warranted, particularly in the context of understanding a compound’s action for a given indication, and also in areas such as repurposing. Informed target/mode-of-action selection can have a profound impact on decreasing the number of failures attributable to efficacy, especially in clinical Phases II and III, which have a profound impact on overall project success. Here, the impact of improved uses of AI in drug discovery can come from a more integrated view on understanding the links between genes and proteins and disease on the one hand and the impact of the modulation of such targets on disease on the other. However, the field needs to evolve beyond ‘target–disease’ links and give more consideration to quantitative and conditional aspects.

Better patient selection (via biomarkers) is likely to increase the chance of clinical success in the future, in relation to both efficacy and safety end points. Recent studies [32] have found that clinical trials using biomarkers roughly double the probability of success of a project, from about 5.5% to 10.3%. Addressing this challenge will simultaneously evolve our understanding of disease and improve our annotations of biological data for the utilization of AI methods. However, it needs to be kept in mind that deriving biomarkers from, for example, gene expression data that are predictive for in vivo end points is not a trivial exercise.
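
The size of the effect quoted above can be worked through in a few lines. This sketch uses only the two probabilities given in the text; the "projects per success" figure additionally assumes that projects succeed independently with the same probability, which is a simplification introduced here.

```python
# Approximate probabilities of project success quoted in the text [32].
p_without_biomarker = 0.055
p_with_biomarker = 0.103

# Relative increase in the probability of success when biomarkers are used.
relative_increase = p_with_biomarker / p_without_biomarker

# Expected number of projects needed per successful project
# (mean of a geometric distribution, assuming independent projects).
projects_per_success_without = 1 / p_without_biomarker
projects_per_success_with = 1 / p_with_biomarker

print(f"relative increase: {relative_increase:.2f}x")
print(f"projects per success: {projects_per_success_without:.1f} "
      f"without vs {projects_per_success_with:.1f} with biomarkers")
```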

Finally, we need to conduct trials more efficiently in terms of, for example, patient recruitment and adherence, which can be supported by computational methods [37]. In many cases, this simply involves computational matching algorithms that have been relabelled as ‘AI’ approaches; such algorithms can often successfully match patients to clinical trials and monitor adherence to treatment regimens [37]. The use of the term ‘AI’ seems in some cases to be a result of the mindset of the current times; however, this is only terminology, and it seems that clinical studies can be improved by better treatment–patient matching protocols.
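
To illustrate how simple such matching can be, the following sketch filters patients by structured inclusion criteria. The record fields, the criteria and the example data are all hypothetical; real systems typically also need to interpret free-text eligibility criteria and clinical records, which is not attempted here.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    patient_id: str
    age: int
    diagnosis: str
    biomarker_positive: bool


@dataclass
class Trial:
    trial_id: str
    diagnosis: str
    min_age: int
    max_age: int
    requires_biomarker: bool


def is_eligible(patient: Patient, trial: Trial) -> bool:
    """Rule-based check of diagnosis, age range and biomarker status."""
    return (
        patient.diagnosis == trial.diagnosis
        and trial.min_age <= patient.age <= trial.max_age
        and (patient.biomarker_positive or not trial.requires_biomarker)
    )


def match_patients(patients: list, trials: list) -> dict:
    """Map each trial id to the ids of all eligible patients."""
    return {
        t.trial_id: [p.patient_id for p in patients if is_eligible(p, t)]
        for t in trials
    }


# Hypothetical records for illustration only.
patients = [
    Patient("P1", 54, "asthma", True),
    Patient("P2", 17, "asthma", True),
    Patient("P3", 63, "asthma", False),
]
trials = [
    Trial("T1", "asthma", 18, 75, requires_biomarker=True),
    Trial("T2", "asthma", 18, 65, requires_biomarker=False),
]
matches = match_patients(patients, trials)
print(matches)
```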

When it comes to data supporting the early stages of drug discovery, we need to realize that the data we currently have are unlikely to give us the answer needed to judge the efficacy and safety of a compound, which we discuss in detail in the second part of this review. Only understanding biological end points of relevance will allow us to generate the data needed for quality-based decision making, which is required to make drug discovery overall more successful. This might, where possible, come from disentangling the role of individual targets in a disease. In other areas, it might involve staying on a descriptive biological-readout level (using cellular and gene expression data, etc.) for drug–disease matching or repurposing. We need to understand better what works, and where, to know which data to generate for a particular question related to efficacy and safety. When available on a sufficient scale, these data can then be applied to decision making, using machine learning and AI methods. Once we have reached this stage, AI in drug discovery will be elevated to a whole different level from where we are now.

Conclusions

Although the area of AI in drug discovery has received much attention recently, this article has attempted to illustrate that with our current ways of generating and utilizing data, we are unlikely to achieve the significantly better decisions that are required to make drug discovery more successful. The key reason for this is the use of proxy data at many stages of decision making, which is the core type of data available on a large scale for computational models, and which is unlikely to address the need for making better-quality decisions for the in vivo situation, in particular in the case of more complex diseases. Although chemical data are available on a large scale, and have been successfully used for ligand design and synthesis, these data mostly relate to ligand discovery. This can be very useful for target validation, for example, but further steps are needed to make full use of AI in drug, rather than ligand, discovery. To truly advance the field, we need to understand the biology better and generate data that contain a signal of interest in a hypothesis-driven manner, related both to efficacy and safety end points. In other words, we need to advance better candidates into the clinic, validate targets better, improve patient recruitment and advance how clinical trials are conducted. All of these aspects require the generation and utilization of data that reflect biological aspects of drug discovery more appropriately. This is likely to involve the generation of novel data with more meaningful end points, which are in many cases likely to be high-dimensional in nature. Once these data are available, we can apply AI methods. However, this goal is less likely to be achieved with much of the proxy data that have been generated up to this point, at least not if the proxy end points are insufficiently understood in their biological context.
As discussed in the second part of this review [2], we need to understand what to measure, and how to measure it, to be able to predict drug efficacy and safety with the desired quality, and then with decreased cost and increased speed. Only once these data are available for AI approaches can the field be expected to make real progress.

Acknowledgements

Claus Bendtsen (AstraZeneca) provided valuable feedback on an earlier version of this manuscript.

References

- Y. LeCun, et al. Deep learning Nature, 521 (2015), pp. 436-444

- Bender, A. and Cortes-Ciriano, I. Artificial intelligence in drug discovery: what is realistic, what are illusions? Part 2: A discussion of chemical and biological data used for AI in drug discovery. Drug Discov. Today (in press).

- N. Brown, et al. Artificial intelligence in chemistry and drug design J. Comput. Aid. Mol. Des., 34 (2020), pp. 709-715

- G. Sliwoski, et al. Computational methods in drug discovery Pharmacol. Rev., 66 (2014), pp. 334-395

- W.B. Schwartz Medicine and the computer. The promise and problems of change N. Engl. J. Med., 283 (1970), pp. 1257-1264

- M. Bartusiak Designing drugs with computers Fortune (1981) 5 October

- K.-H. Yu, et al. Artificial intelligence in healthcare Nat. Biomed. Eng., 2 (2018), pp. 719-731

- J.H. Van Drie Computer-aided drug design: the next 20 years J. Comput. Aid. Mol. Des., 21 (2007), pp. 591-601

- L. Wang, et al. Accurate and reliable prediction of relative ligand binding potency in prospective drug discovery by way of a modern free-energy calculation protocol and force field J. Am. Chem. Soc., 137 (2015), pp. 2695-2703

- M. Davies, et al. Improving the accuracy of predicted human pharmacokinetics: lessons learned from the AstraZeneca drug pipeline over two decades Trends Pharmacol. Sci., 41 (2020), pp. 390-408

- S. Schneckener, et al. Prediction of oral bioavailability in rats: transferring insights from in vitro correlations to (deep) machine learning models using in silico model outputs and chemical structure parameters J. Chem. Inf. Model., 59 (2019), pp. 4893-4905

- J. Kim, et al. Patient-customized oligonucleotide therapy for a rare genetic disease N. Engl. J. Med., 381 (2019), pp. 1644-1652

- Van Allen, E.M. et al. Genomic correlates of response to CTLA-4 blockade in metastatic melanoma. Science 350, 207–211.

- T.J. Ritchie, I.M. McLay Should medicinal chemists do molecular modelling? Drug Discov. Today, 17 (2012), pp. 534-537

- E.J. Griffen, et al. Chemists: AI is here, unite to get the benefits J. Med. Chem. (2020), 10.1021/acs.jmedchem.0c00163

- Lavecchia, A. and Di Giovanni, C. Virtual screening strategies in drug discovery: a critical review. Curr. Med. Chem. 20, 2839–2860.

- https://lifescivc.com/2017/04/four-decades-hacking-biotech-yet-biology-still-consumes-everything/ (accessed 4 May 2020).

- R.S. Bohacek, et al. The art and practice of structure‐based drug design: a molecular modeling perspective Med. Res. Rev., 16 (1999), pp. 3-50

- M. Ghandi, et al. Next-generation characterization of the Cancer Cell Line Encyclopedia Nature, 569 (2019), pp. 503-508

- The ICGC/TCGA Pan-Cancer Analysis of Whole Genomes Consortium (2020) Pan-cancer analysis of whole genomes. Nature 578, 82–93.

- L.M. Sack, et al. Profound tissue specificity in proliferation control underlies cancer drivers and aneuploidy patterns Cell, 173 (2018), pp. 499-514

- B.A. Kozikowski, et al. The effect of freeze/thaw cycles on the stability of compounds in DMSO J. Biomol. Screen., 8 (2003), pp. 210-215

- U. Ben-David, et al. Genetic and transcriptional evolution alters cancer cell line drug response Nature, 560 (2018), pp. 325-330

- G.S. Kinker, et al. Pan-cancer single cell RNA-seq uncovers recurring programs of cellular heterogeneity Preprint at: https://www.biorxiv.org/content/10.1101/807552v1 (2019)

- C. Kramer, et al. The experimental uncertainty of heterogeneous public K(i) data J. Med. Chem., 55 (2012), pp. 5165-5173

- I. Cortés-Ciriano, A. Bender How consistent are publicly reported cytotoxicity data? Large-scale statistical analysis of the concordance of public independent cytotoxicity measurements ChemMedChem, 11 (2016), pp. 57-71

- S.P. Brown, et al. Healthy skepticism: assessing realistic model performance Drug Discov. Today, 14 (2009), pp. 420-427

- H.T.N. Tran, et al. A benchmark of batch-effect correction methods for single-cell RNA sequencing data Genome Biol., 21 (2020), p. 12

- R. Macarron, et al. Impact of high-throughput screening in biomedical research Nat. Rev. Drug Discov., 10 (2011), pp. 188-195

- D.C. Swinney, J. Anthony How were new medicines discovered? Nat. Rev. Drug Discov., 10 (2011), pp. 507-519

- J.W. Scannell, J. Bosley When quality beats quantity: decision theory, drug discovery, and the reproducibility crisis PLoS One, 11 (2016), Article e0147215

- C.H. Wong, et al. Estimation of clinical trial success rates and related parameters Biostatistics, 20 (2019), pp. 273-286

- J. Mestre-Ferrandiz, et al. The R&D Cost of a New Medicine OHE (2012)

- S.M. Paul, et al. How to improve R&D productivity: the pharmaceutical industry’s grand challenge Nat. Rev. Drug Discov., 9 (2010), pp. 203-214

- P. Morgan, et al. Impact of a five-dimensional framework on R&D productivity at AstraZeneca Nat. Rev. Drug Discov., 17 (2018), pp. 167-181

- A. Lin, et al. Off-target toxicity is a common mechanism of action of cancer drugs undergoing clinical trials Sci. Transl. Med., 11 (2019), Article eaaw8412

- M. Woo An AI boost for clinical trials Nature, 573 (2019), pp. S100-S102

- V. Quirke Putting theory into practice: James Black, receptor theory and the development of the beta-blockers at ICI, 1958–1978 Med. Hist., 50 (2006), pp. 69-92

- J.C. Venter, et al. The sequence of the human genome Science, 291 (2001), pp. 1304-1351

- E. Lander, et al. Initial sequencing and analysis of the human genome Nature, 409 (2001), pp. 860-921

- F. Sams-Dodd Target-based drug discovery: is something wrong? Drug Discov. Today, 10 (2005), pp. 139-147

- Y. Lazebnik Can a biologist fix a radio?—Or, what I learned while studying apoptosis Cancer Cell, 2 (2002), pp. 179-182

- R. Heilker, et al. The power of combining phenotypic and target-focused drug discovery Drug Discov. Today, 24 (2019), pp. 526-532

- Moffat, J.G. et al. Phenotypic screening in cancer drug discovery – past, present and future. Nat. Rev. Drug Discov. 13, 588–602.

- M. Schirle, J.L. Jenkins Identifying compound efficacy targets in phenotypic drug discovery Drug Discov. Today, 21 (2016), pp. 82-89

- E.N. Feinberg, et al. PotentialNet for molecular property prediction ACS Cent. Sci., 4 (2018), pp. 1520-1530

- M. Torrice Ketamine is revolutionizing antidepressant research, but we still don’t know how it works C&EN News, 98 (2020), p. 3

- M.E. Kuruvilla, et al. Understanding asthma phenotypes, endotypes, and mechanisms of disease Clin. Rev. Allergy Immunol., 56 (2019), pp. 219-233

- R.M. Berman, et al. Antidepressant effects of ketamine in depressed patients Biol. Psychiatry, 47 (2000), pp. 351-354

- N.R. Williams, et al. Attenuation of antidepressant and antisuicidal effects of ketamine by opioid receptor antagonism Mol. Psychiatry, 24 (2019), pp. 1779-1786

- G. Yoon, et al. Association of combined naltrexone and ketamine with depressive symptoms in a case series of patients with depression and alcohol use disorder JAMA Psychiatry, 76 (2019), pp. 337-338

- P. Zanos, et al. NMDAR inhibition-independent antidepressant actions of ketamine metabolites Nature, 533 (2016), pp. 481-486

- J.P. Hughes, et al. Principles of early drug discovery Br. J. Pharmacol., 162 (2011), pp. 1239-1249

- N. Perrin Preclinical research: make mouse studies work Nature, 507 (2014), pp. 423-425

- J. Bailey, et al. An analysis of the use of animal models in predicting human toxicology and drug safety Altern. Lab. Anim., 42 (2014), pp. 181-199

- D. Siolas, G.J. Hannon Patient derived tumor xenografts: transforming clinical samples into mouse models Cancer Res., 73 (2013), pp. 5315-5319

- K. Bera, et al. Artificial intelligence in digital pathology — new tools for diagnosis and precision oncology Nat. Rev. Clin. Oncol., 16 (2019), pp. 703-715

- M. Zimmermann, et al. Separating host and microbiome contributions to drug pharmacokinetics and toxicity Science, 363 (2019), Article eaat9931

- P. Horvath, et al. Screening out irrelevant cell-based models of disease Nat. Rev. Drug Discov., 15 (2016), pp. 751-769

- R.J. Weaver, J.-P. Valentin Today’s challenges to de-risk and predict drug safety in human “mind-the-gap” Toxicol. Sci., 167 (2019), pp. 307-321

- I.W.Y. Mak, et al. Lost in translation: animal models and clinical trials in cancer treatment Am. J. Transl. Res., 6 (2014), pp. 114-118

- J. Shi, et al. The fidelity of cancer cells in PDX models: characteristics, mechanism and clinical significance Int. J. Cancer, 146 (2020), pp. 2078-2088

- C.H.C. Leenaars, et al. Animal to human translation: a systematic scoping review of reported concordance rates J. Transl. Med., 17 (2019), p. 223

- K. Han, et al. CRISPR screens in cancer spheroids identify 3D growth-specific vulnerabilities Nature, 580 (2020), pp. 136-141

- H. Gao, et al. High-throughput screening using patient-derived tumor xenografts to predict clinical trial drug response Nat. Med., 21 (2015), pp. 1318-1325

- Schneider, G. and Clark, D.E. Automated de novo drug design: are we nearly there yet? Angew. Chem. Int. Ed. 58, 10792–10803.

- I.W. Davies The digitization of organic synthesis Nature, 570 (2019), pp. 175-181

- D.A. Filimonov, et al. The computerized prediction of the spectrum of biological activity of chemical compounds by their structural formula: the PASS system. Prediction of Activity Spectra for Substance Eksp. Klin. Farmakol., 58 (1995), pp. 56-62

- M.J. Keiser, et al. Relating protein pharmacology by ligand chemistry Nat. Biotechnol., 25 (2007), pp. 197-206

- Schneider, P. and Schneider, G. A computational method for unveiling the target promiscuity of pharmacologically active compounds in silico. Angew. Chem. Int. Ed. 56, 11520–11524.

- L.H. Mervin, et al. Target prediction utilising negative bioactivity data covering large chemical space J. Cheminf., 7 (2015), p. 51

- A. Mayr, et al. Large-scale comparison of machine learning methods for drug target prediction on ChEMBL Chem. Sci., 9 (2018), pp. 5441-5451

- E.B. Lenselink, et al. Beyond the hype: deep neural networks outperform established methods using a ChEMBL bioactivity benchmark set J. Cheminf., 9 (2017), p. 45

- J. Simm, et al. Repurposing high-throughput image assays enables biological activity prediction for drug discovery Cell Chem. Biol., 25 (2018), pp. 611-618

- M.A. Trapotsi Multitask bioactivity predictions using structural chemical and cell morphology information Preprint at: (2020), 10.26434/chemrxiv.12571241.v1

- T.R. Lane, et al. A very large-scale bioactivity comparison of deep learning and multiple machine learning algorithms for drug discovery Preprint at: (2020), 10.26434/chemrxiv.12781241.v1

- M.C. Robinson, et al. Validating the validation: reanalyzing a large-scale comparison of deep learning and machine learning models for bioactivity prediction J. Comput. Aid. Mol. Des. (2020), 10.1007/s10822-019-00274-0

- M.R. Nelson, et al. The support of human genetic evidence for approved drug indications Nat. Genet., 47 (2015), pp. 856-860

- E.A. Boyle, et al. An expanded view of complex traits: from polygenic to omnigenic Cell, 169 (2017), pp. 1177-1186

- H.E. Francies, et al. Genomics-guided pre-clinical development of cancer therapies Nat. Cancer, 1 (2020), pp. 482-492

- F.M. Behan, et al. Prioritization of cancer therapeutic targets using CRISPR-Cas9 screens Nature, 568 (2019), pp. 511-516

- D.A. Nathanson, et al. Targeted therapy resistance mediated by dynamic regulation of extrachromosomal mutant EGFR DNA Science, 343 (2014), pp. 72-76

- V.R. Knight-Schrijver, et al. The promises of quantitative systems pharmacology modelling for drug development Comp. Struct. Biotech. J., 14 (2016), pp. 363-370

- https://www.ibm.com/blogs/research/2020/08/roborxn-automating-chemical-synthesis/, accessed 7 September 2020.

- C.W. Coley, et al. A robotic platform for flow synthesis of organic compounds informed by AI planning Science, 365 (2019), p. 557

- C.A. Nicolaou, N. Brown Multi-objective optimization methods in drug design Drug Discov. Today Technol., 10 (2013), pp. e427-e435

- Z. del Rosario, et al. Assessing the frontier: active learning, model accuracy, and multi-objective candidate discovery and optimization J. Chem. Phys., 153 (2020), Article 024112

- C. Sutton, et al. Identifying domains of applicability of machine learning models for materials science Nat. Commun., 11 (2020), p. 4428

- V. Gulshan, et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs JAMA, 316 (2016), pp. 2402-2410

- M. Voets, et al. Replication study: development and validation of deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs Preprint at: https://arxiv.org/abs/1803.04337v1 (2018)

- E. Raff A step toward quantifying independently reproducible machine learning research Preprint at: https://arxiv.org/abs/1909.06674 (2019)

- G. Valdes, Y. Interian Comment on ‘Deep convolutional neural network with transfer learning for rectum toxicity prediction in cervical cancer radiotherapy: a feasibility study’ Phys. Med. Biol., 63 (2018), Article 068001